In the 1980s, microprocessor speeds began to grow much faster than memory access times improved. It quickly became apparent that something had to be done to speed up memory access and make the whole system more efficient. This widening gap between processing speed and memory speed led to the development of the CPU cache: a small, fast memory that sits between the processor and main memory (RAM) and keeps copies of data the processor is likely to need.

The invention of the cache was one of the most critical events in the history of computing. But what exactly is it hiding? How does it work?

The cache is organized into levels. The first is the L1 cache, the smallest and fastest level, built into each processor core. It is split in two: an instruction cache, which holds the instructions the CPU is about to execute, and a data cache, which holds the data those instructions operate on.

Next comes the L2 cache, which is larger but slower than L1. It typically holds between 256 KB and 8 MB of data that the processor is likely to need soon.

Finally, there is the L3 cache, the largest and slowest of the three, typically holding 4 MB to 50 MB.

When a program runs, data moves from RAM into the L3 cache, then L2, and finally L1. Whenever the processor needs a piece of information, it checks the L1 cache first, then L2, then L3. If it finds the information in a cache, that is called a cache hit. If it does not, that is a cache miss, and the processor has to look in the next, slower level, falling back to RAM if every cache misses.
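To make the lookup order concrete, here is a minimal Python sketch of a three-level cache. It is a toy model built on several assumptions: `CacheLevel` and `access` are hypothetical names, each level tracks whole addresses rather than fixed-size cache lines, eviction is least-recently-used (LRU), and a miss copies the data into every faster level. Real CPUs differ in line size, replacement policy, and whether levels are inclusive or exclusive.

```python
from collections import OrderedDict


class CacheLevel:
    """Toy cache level (hypothetical): holds up to `capacity` addresses, LRU eviction."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.lines = OrderedDict()  # insertion order doubles as recency order

    def lookup(self, address):
        if address in self.lines:
            self.lines.move_to_end(address)  # mark as most recently used
            return True
        return False

    def fill(self, address):
        self.lines[address] = True
        self.lines.move_to_end(address)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used address


def access(levels, address):
    """Search L1, then L2, then L3; return the name of the level that hit, or 'RAM'."""
    for i, level in enumerate(levels):
        if level.lookup(address):
            # Cache hit: copy the data into the faster levels that missed.
            for faster in levels[:i]:
                faster.fill(address)
            return level.name
    # Miss at every level: fetch from RAM and fill every cache level (a simplification).
    for level in levels:
        level.fill(address)
    return "RAM"


if __name__ == "__main__":
    levels = [CacheLevel("L1", 2), CacheLevel("L2", 4), CacheLevel("L3", 8)]
    print(access(levels, 0x40))  # RAM: the first touch misses at every level
    print(access(levels, 0x40))  # L1: the second touch is a cache hit
```

The capacities here are tiny so that evictions happen quickly; once an address is pushed out of L1 but is still in L2, the next access to it is reported as an L2 hit.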

Latency, the time it takes to retrieve a piece of information, is an important factor in a computer's performance. The L1 cache is the fastest and therefore has the lowest latency. When a cache miss occurs, latency increases because the processor must keep searching the slower cache levels, and possibly RAM, to find the information it needs.
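One standard way to quantify this is the average memory access time (AMAT): each level costs its own hit latency plus its miss rate times the average cost of going to the next level, so AMAT = t_L1 + m_L1 × (t_L2 + m_L2 × (t_L3 + m_L3 × t_RAM)). The sketch below computes that from RAM inward. The function name is hypothetical, and the latencies and miss rates in the example are made-up illustrative values, not measurements from any real CPU. The miss rates are local: the fraction of requests reaching a level that miss there.

```python
def average_access_time(levels, memory_latency):
    """Average memory access time, in cycles (hypothetical helper).

    levels: list of (hit_latency_cycles, local_miss_rate) tuples, ordered L1 first.
    memory_latency: cycles needed to fetch from RAM when every cache level misses.
    """
    time = memory_latency
    # Work inward from RAM: each level costs its hit latency plus,
    # on a miss, the average cost of the level behind it.
    for hit_latency, miss_rate in reversed(levels):
        time = hit_latency + miss_rate * time
    return time


if __name__ == "__main__":
    # Illustrative figures only: (hit latency, local miss rate) for L1, L2, L3.
    hierarchy = [(4, 0.10), (12, 0.50), (40, 0.50)]
    print(average_access_time(hierarchy, 200))  # about 12.2 cycles
    print(average_access_time([], 200))         # 200 cycles with no cache at all
```

With these assumed numbers, the caches bring the average cost of an access down from 200 cycles to roughly 12, even though an access that misses every level still costs more than 200 cycles.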

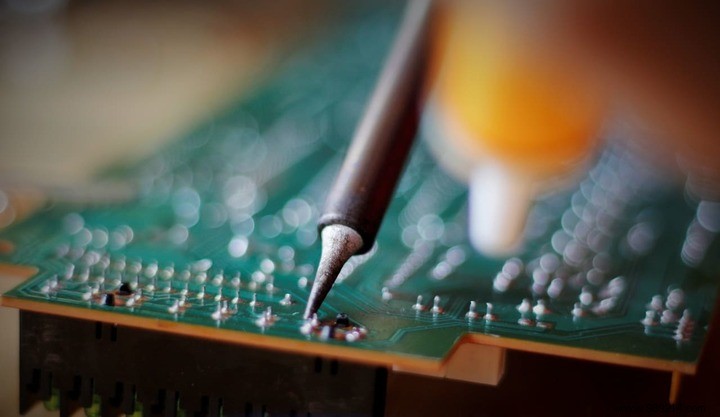

Newer CPUs are built with much smaller transistors, which has made it possible to place cache directly on top of the processor itself. Physically placing the cache closer to the CPU cores reduces latency.

Cache is rarely something computer vendors highlight, but it is worth checking. Caches with lower latency, and with enough capacity to turn more lookups into hits, mean fewer slow trips to RAM, making your programs faster and more efficient.